Most enterprises don’t have a Salesforce problem. They have a multi-cloud problem that happens to run on Salesforce.

Sales Cloud for pipeline. Service Cloud for support. Marketing Cloud for campaigns. Commerce Cloud for transactions. Each cloud works perfectly in isolation. The architecture breaks when you try to unify them. Customer data fragments across orgs. Integration layers become bottlenecks. Governor limits hit at scale. The technical debt compounds until a single change requires coordinating across five teams.

The solution isn’t more integration middleware. It’s understanding which Salesforce multi-cloud architecture patterns actually survive contact with enterprise constraints, and which ones collapse under their own complexity.

The Multi-Cloud Unification Problem

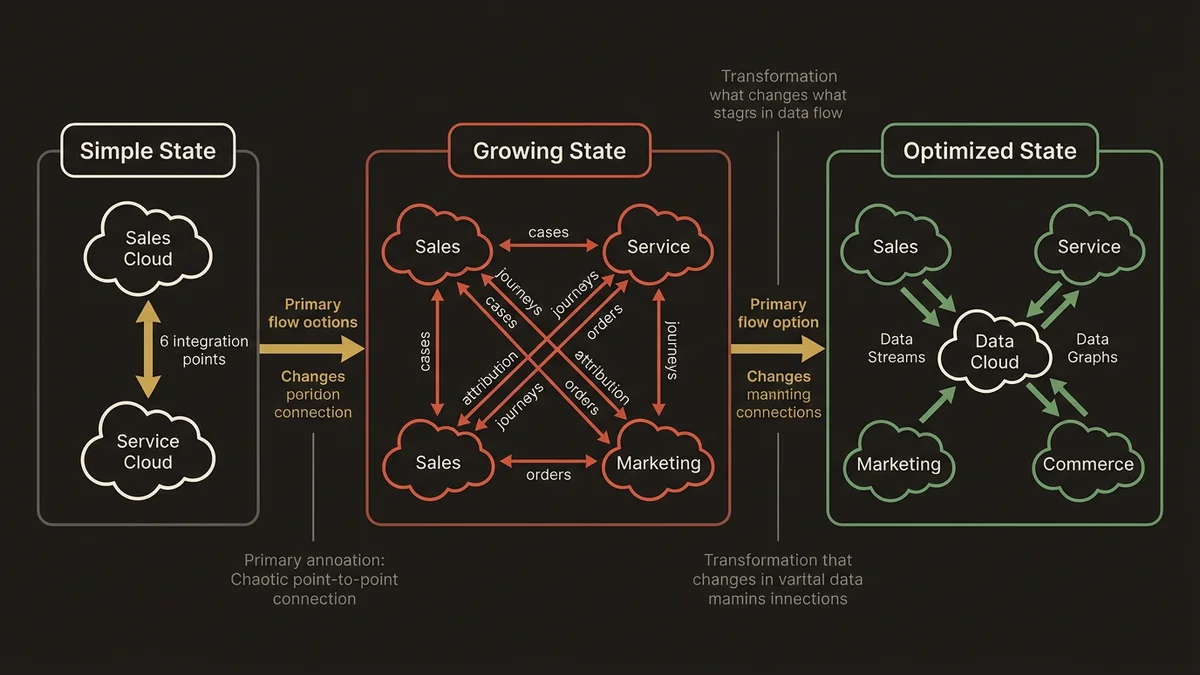

Here’s what breaks at scale: point-to-point integrations.

An org with Sales Cloud and Service Cloud starts simple. Build an API integration. Cases reference Opportunities. Accounts sync bidirectionally. It works for 500 users.

Then Marketing Cloud enters. Now you need customer journey data flowing to Service for context. Service needs to trigger journey exits. Sales needs campaign attribution. You’re at six integration points.

Add Commerce Cloud. Order data needs to flow to Sales (revenue recognition), Service (returns), Marketing (abandoned cart), and Data Cloud (unified profile). You’re at 15 integration points. Each one has its own error handling, retry logic, and monitoring. Each one is a failure point.

The architecture that works here is hub-and-spoke with Data Cloud as the system of record for customer state. Not because Data Cloud is magic. Because it’s the only component designed to handle the data volume and cardinality that multi-cloud creates.
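The growth described above can be approximated with simple arithmetic. The sketch below is illustrative only: it assumes one link per pair of systems, whereas the figures in the text count individual data flows, so the numbers will not line up exactly. The function names are hypothetical.

```python
def point_to_point_links(systems: int) -> int:
    """Links needed if every system integrates directly with every other one."""
    return systems * (systems - 1) // 2


def hub_and_spoke_links(spokes: int) -> int:
    """Links needed if every spoke connects only to a central hub."""
    return spokes


if __name__ == "__main__":
    # Compare how the two topologies grow as systems are added.
    for count in range(2, 7):
        print(count, point_to_point_links(count), hub_and_spoke_links(count))
```

Pairwise links grow quadratically while spokes grow linearly, which is the underlying reason the hub pattern holds up as clouds are added.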

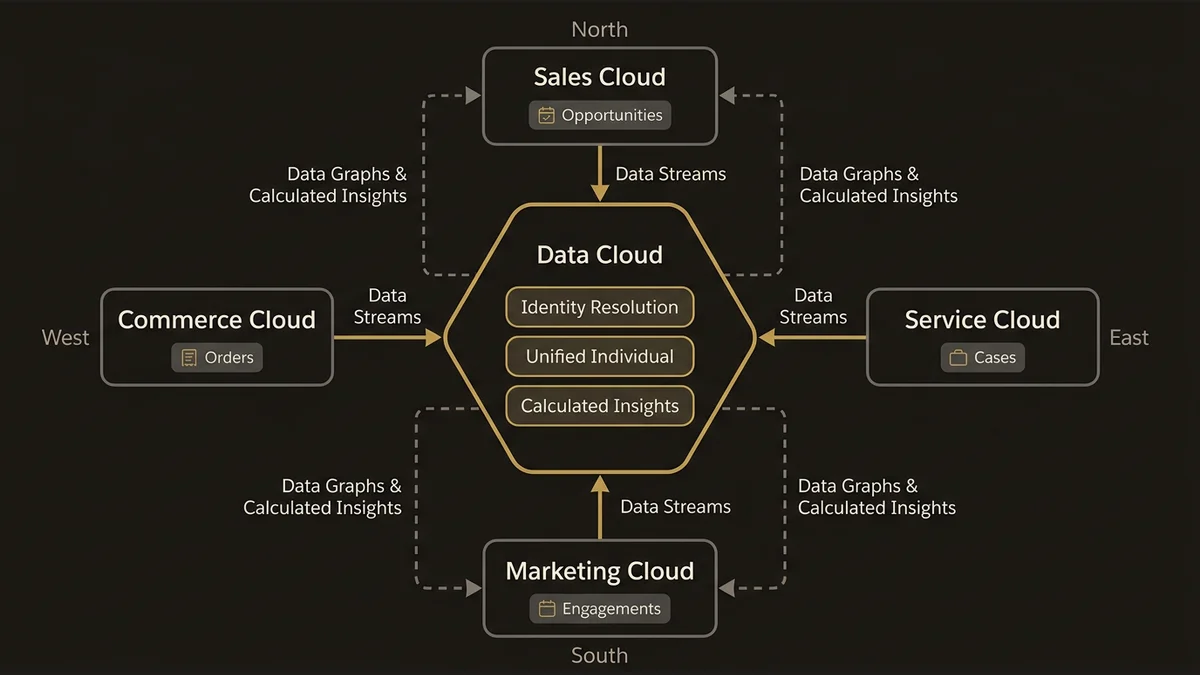

Pattern 1: Data Cloud as Integration Hub

Data Cloud isn’t a CDP. It’s an integration layer that happens to store customer profiles.

The pattern: each cloud writes to Data Cloud via Data Streams. Data Cloud becomes the source of truth for customer state. Other clouds read from Data Cloud via Data Graphs and Calculated Insights.

Why this works:

- Data Streams handle 50M+ records/day ingestion without custom API limits

- Data Graphs pre-compute joins across clouds (no runtime query overhead)

- Identity Resolution creates a Unified Individual across all touchpoints

- Calculated Insights compute metrics that can be pushed back to source clouds

Implementation specifics:

- Sales Cloud: Opportunity data flows via Data Stream → DMO → Data Graph

- Service Cloud: Case data flows via Data Stream → linked to Unified Individual

- Marketing Cloud: Engagement data via Marketing Cloud Connect → Data Stream

- Commerce Cloud: Order data via MuleSoft → Data Stream (Commerce doesn’t have a native connector yet)

The critical architectural decision: what data lives in Data Cloud vs. source clouds. The rule: transactional data stays in source clouds. Customer state and cross-cloud aggregations live in Data Cloud.

In practice, this means Opportunities stay in Sales Cloud. But “customer lifetime value across all clouds” lives in Data Cloud as a Calculated Insight, then syncs back to Sales Cloud for territory planning.
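To make the split concrete, here is a minimal sketch of the kind of cross-cloud aggregation that belongs in the hub. It is plain Python, not Data Cloud syntax (in Data Cloud this would be defined as a Calculated Insight), and the `Transaction` type, its fields, and the sample IDs are all assumptions made for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Transaction:
    """A hypothetical revenue record ingested from one source cloud."""
    unified_individual_id: str
    source_cloud: str
    amount: float


def lifetime_value_by_customer(transactions):
    """Sum revenue per unified customer, regardless of which cloud produced it."""
    totals = defaultdict(float)
    for tx in transactions:
        totals[tx.unified_individual_id] += tx.amount
    return dict(totals)


sample = [
    Transaction("ui-1", "sales", 1200.0),
    Transaction("ui-1", "commerce", 300.0),
    Transaction("ui-2", "commerce", 50.0),
]
clv = lifetime_value_by_customer(sample)
```

The individual transactions never leave their source clouds in this model. Only the aggregate, keyed by the unified customer ID, is what would sync back to Sales Cloud.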

Pattern 2: Event-Driven State Synchronization

Point-to-point REST APIs don’t scale past three clouds. Platform Events do.

The pattern: state changes publish to Platform Events. Subscribers in each cloud react independently. No orchestration layer. No central coordinator that becomes a bottleneck.

Architecture:

- Sales Cloud: Opportunity stage change → Platform Event

- Service Cloud: Subscriber creates Case if stage = “Closed Lost” and reason = “Product Issue”

- Marketing Cloud: Subscriber triggers win/loss nurture journey

- Data Cloud: Subscriber updates Unified Individual with latest deal state

Why this survives scale:

- Platform Events handle up to 250K published events per hour per org (standard high-volume allocation; confirm the figure for your edition)

- Subscribers process independently (no cascading failures)

- Replay capability for failed processing (72-hour retention for high-volume events)

- No API limits consumed when publishing from Apex or Flow (event delivery is a separate allocation)

The failure mode most architects miss: event ordering. Platform Events guarantee delivery, not order. If your logic depends on “Opportunity created, then updated, then closed” arriving in sequence, you need sequence numbers in the event payload and idempotent subscribers.

A common pattern in enterprise orgs: embed a timestamp and version number in every event. Subscribers check “is this event newer than my last processed event?” before applying changes. Prevents out-of-order events from reverting state.
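The version check described above can be sketched as follows. This is a language-neutral illustration in Python rather than Apex or a Flow, and the `OpportunityEvent` shape and `OpportunityStateSubscriber` class are hypothetical names, not Salesforce types. It assumes the publisher increments `version` on every change to an Opportunity.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class OpportunityEvent:
    """Hypothetical event payload carrying a per-record version number."""
    opportunity_id: str
    version: int
    stage: str


@dataclass
class OpportunityStateSubscriber:
    """Applies an event only if it is newer than the last one processed."""
    _state: dict = field(default_factory=dict)  # opportunity_id -> (version, stage)

    def handle(self, event: OpportunityEvent) -> bool:
        current = self._state.get(event.opportunity_id)
        if current is not None and event.version <= current[0]:
            return False  # stale or duplicate event: ignore it
        self._state[event.opportunity_id] = (event.version, event.stage)
        return True

    def stage_of(self, opportunity_id: str):
        current = self._state.get(opportunity_id)
        return current[1] if current else None


subscriber = OpportunityStateSubscriber()
results = [
    subscriber.handle(OpportunityEvent("006-demo", 1, "Prospecting")),
    subscriber.handle(OpportunityEvent("006-demo", 3, "Closed Lost")),
    subscriber.handle(OpportunityEvent("006-demo", 2, "Negotiation")),  # arrives late
    subscriber.handle(OpportunityEvent("006-demo", 3, "Closed Lost")),  # redelivered
]
```

Because the late version 2 event and the redelivered version 3 event are both rejected, replaying events after a failure cannot revert or double-apply state.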

Pattern 3: Federated Identity with Unified Profile

Multi-cloud breaks when each cloud has its own concept of “customer.”

Sales Cloud knows them as a Lead, then Contact. Service Cloud knows them as a Contact with Cases. Marketing Cloud knows them as a Subscriber. Commerce Cloud knows them as a Shopper. Same human, four identities, zero unified view.

The architecture that works: Identity Resolution in Data Cloud with federated lookups.

Implementation:

- Data Cloud ingests identity data from all clouds via Data Streams

- Identity Resolution rulesets match across email, phone, loyalty ID, cookie ID

- Creates a Unified Individual (single customer record)

- Each cloud maintains its own objects but links to Unified Individual ID

Critical ruleset design:

- Exact match on email (high confidence)

- Fuzzy match on name + address (medium confidence, requires review)

- Cookie-to-email linkage via Marketing Cloud (low confidence, time-decayed)

- Manual merge capability for edge cases
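The exact-match tier of such a ruleset can be illustrated with a small union-find structure. This is a sketch of the general technique, not how Data Cloud implements Identity Resolution. The `IdentityResolver` class and the record IDs are hypothetical, and fuzzy matching, confidence tiers, and time decay are deliberately left out.

```python
class IdentityResolver:
    """Groups source records that share an exact email or phone value."""

    def __init__(self):
        self._parent = {}     # record id -> parent record id
        self._key_owner = {}  # (field, normalized value) -> first record seen

    def _find(self, record_id):
        # Walk to the root, shortening the path as we go.
        while self._parent[record_id] != record_id:
            self._parent[record_id] = self._parent[self._parent[record_id]]
            record_id = self._parent[record_id]
        return record_id

    def _union(self, a, b):
        root_a, root_b = self._find(a), self._find(b)
        if root_a != root_b:
            # Pick the smaller id as root so results are deterministic.
            self._parent[max(root_a, root_b)] = min(root_a, root_b)

    def add_record(self, record_id, email=None, phone=None):
        """Register a record, or new identifiers for an existing record."""
        self._parent.setdefault(record_id, record_id)
        for field_name, value in (("email", email), ("phone", phone)):
            if not value:
                continue
            key = (field_name, value.strip().lower())
            owner = self._key_owner.setdefault(key, record_id)
            self._union(owner, record_id)

    def unified_id(self, record_id):
        return self._find(record_id)


resolver = IdentityResolver()
resolver.add_record("sales:contact-1", email="ana@example.com")
resolver.add_record("marketing:subscriber-7", email=" Ana@Example.com ")
resolver.add_record("commerce:shopper-9", phone="555-0100")
separate_before_update = (
    resolver.unified_id("commerce:shopper-9") != resolver.unified_id("sales:contact-1")
)
# The shopper later adds the same email, which links the two profiles.
resolver.add_record("commerce:shopper-9", email="ana@example.com")
```

Note that the last call changes the unified ID for a record that already existed, which is exactly the kind of change that has to be propagated back to each cloud.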

The problem isn’t creating the Unified Individual. The problem is keeping it unified as data changes. A customer updates their email in Commerce Cloud. That needs to flow to Data Cloud, trigger Identity Resolution re-evaluation, potentially merge two previously separate profiles, and propagate the new Unified Individual ID back to all clouds.

In practice, this requires Data Streams with near-real-time ingestion (5-minute latency) and Flow orchestration in each cloud to handle Unified Individual ID updates.

Pattern 4: Selective Data Replication

Not all data belongs in all clouds. Most multi-cloud architectures fail because they try to replicate everything everywhere.

The pattern: replicate only the minimum data required for each cloud’s function. Use Data Cloud Data Graphs for cross-cloud queries instead of replicating into source clouds.

Decision framework:

- Replicate if the cloud needs to write back to the data (Service Cloud needs Account data to update Cases)

- Data Graph if the cloud only needs to read for context (Service Cloud needs to display Opportunity pipeline but never updates it)

- Calculated Insight if the cloud needs an aggregation (Sales Cloud needs “total support cases last 90 days” but not individual Case records)

Example architecture for Service Cloud:

- Replicate: Account, Contact (Service agents update these)

- Data Graph: Opportunity pipeline, Marketing engagement history (read-only context)

- Calculated Insight: Customer lifetime value, product usage metrics (pre-computed aggregations)

This reduces data volume in Service Cloud by 70% while maintaining full context. Agents see everything they need. The org doesn’t hit storage limits. Queries stay fast because you’re not joining across millions of replicated records.

The failure mode: trying to use Data Graphs for real-time operational data. Data Graphs refresh on a schedule (fastest is hourly). If Service agents need to see Opportunities updated in the last 5 minutes, you need replication, not Data Graphs.
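The decision framework and its failure mode can be written down as a small function. This is a sketch for reasoning about the choice, not a Salesforce API. The enum, the function name, and the parameter names are assumptions.

```python
from enum import Enum


class AccessPattern(Enum):
    REPLICATE = "replicate"
    DATA_GRAPH = "data_graph"
    CALCULATED_INSIGHT = "calculated_insight"


def choose_access_pattern(
    *,
    writes_back: bool,
    needs_aggregate_only: bool,
    needs_real_time: bool = False,
) -> AccessPattern:
    """Pick how a consuming cloud should access data owned by another cloud."""
    # Write access or freshness requirements rule out scheduled refreshes.
    if writes_back or needs_real_time:
        return AccessPattern.REPLICATE
    # Only a rolled-up number is needed, not the underlying records.
    if needs_aggregate_only:
        return AccessPattern.CALCULATED_INSIGHT
    # Read-only context that tolerates refresh latency.
    return AccessPattern.DATA_GRAPH


account_in_service = choose_access_pattern(writes_back=True, needs_aggregate_only=False)
pipeline_in_service = choose_access_pattern(writes_back=False, needs_aggregate_only=False)
case_count_in_sales = choose_access_pattern(writes_back=False, needs_aggregate_only=True)
live_pipeline = choose_access_pattern(
    writes_back=False, needs_aggregate_only=False, needs_real_time=True
)
```

Checking the freshness requirement first is a deliberate choice: it keeps a read-only but time-sensitive object from being routed to a Data Graph by default.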

Pattern 5: Orchestrated Cross-Cloud Processes

Some business processes span multiple clouds. Order-to-cash: Commerce Cloud creates order → Sales Cloud recognizes revenue → Service Cloud handles fulfillment issues → Marketing Cloud triggers post-purchase journey.

The pattern: Flow orchestration in a designated “process owner” cloud with External Services calling other clouds.

Architecture:

- Commerce Cloud is process owner (order creation triggers everything)

- Flow in Commerce Cloud orchestrates the sequence

- External Services call Sales Cloud REST API (create Opportunity)

- External Services call Service Cloud REST API (create fulfillment Case if needed)

- Platform Event to Marketing Cloud (trigger journey)

Why Flow instead of MuleSoft:

- Flow handles 90% of orchestration needs without external middleware

- External Services consume API limits but stay within governor limits at scale

- Flow error handling and retry logic are built in

- No additional infrastructure to maintain

The critical design decision: which cloud owns the process. The rule: the cloud where the process starts owns orchestration. Don’t try to orchestrate from Data Cloud (it’s not designed for this). Don’t try to orchestrate from a “neutral” integration layer (creates a bottleneck).

In practice, this means Commerce Cloud orchestrates order-to-cash. Sales Cloud orchestrates quote-to-cash. Service Cloud orchestrates case-to-resolution. Each cloud handles its own domain, calls other clouds as needed, publishes events for downstream processes.
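The shape of a process-owner orchestration can be sketched outside of Flow. The Python below is an illustration of the control flow only. In an org this would be a Flow with fault paths, and `Step`, `TransientError`, `run_process`, and the step functions are hypothetical names standing in for calls to other clouds.

```python
from dataclasses import dataclass
from typing import Callable


class TransientError(Exception):
    """Raised by a step when a retry might succeed (for example, a timeout)."""


@dataclass(frozen=True)
class Step:
    name: str
    action: Callable[[dict], None]
    max_attempts: int = 3


def run_process(steps, context: dict) -> list:
    """Run steps in order from the owning cloud; return the names that completed.

    Stops at the first step that exhausts its retries so later steps
    never run against a half-finished process.
    """
    completed = []
    for step in steps:
        for attempt in range(1, step.max_attempts + 1):
            try:
                step.action(context)
                completed.append(step.name)
                break
            except TransientError:
                if attempt == step.max_attempts:
                    return completed
    return completed


attempts = {"create_opportunity": 0}


def create_opportunity(context: dict) -> None:
    # Stand-in for a call to another cloud that fails once, then succeeds.
    attempts["create_opportunity"] += 1
    if attempts["create_opportunity"] == 1:
        raise TransientError("simulated timeout")
    context["opportunity_id"] = "006-demo"


def publish_journey_event(context: dict) -> None:
    context["journey_event_published"] = True


order_context = {"order_id": "order-1"}
finished = run_process(
    [Step("create_opportunity", create_opportunity),
     Step("publish_journey_event", publish_journey_event)],
    order_context,
)
```

Keeping the step list in one place, inside the cloud where the process starts, is what makes ownership explicit: there is a single definition of the sequence and a single place to look when a step fails.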

Key Takeaways

- Data Cloud as integration hub eliminates point-to-point complexity but requires careful data modeling (what lives where)

- Platform Events enable event-driven architecture that scales past three clouds without central orchestration bottlenecks

- Identity Resolution creates a Unified Individual but requires near-real-time data streams and Flow orchestration to maintain consistency

- Selective replication (replicate vs. Data Graph vs. Calculated Insight) reduces data volume by 70% while maintaining full context

- Flow orchestration in the process-owner cloud handles 90% of cross-cloud processes without external middleware

The architecture that survives enterprise scale isn’t the one with the most sophisticated integration layer. It’s the one that minimizes integration points, uses platform-native capabilities, and makes explicit decisions about data ownership.
